AI-ght, let's learn about Artificial Intelligence

AI news has been discussed to exhaustion over the last couple of years, but I rarely find a simple source that answers most of my questions, specifically about the basics. This post covers the following topics: what is AI, use cases, hardware companions, and LLMs.

What is AI

AI or Machine Learning?

The term AI (Artificial Intelligence) was coined in the mid-1950s by John McCarthy and fellow researchers, for a summer workshop at Dartmouth College on getting machines to think. When you hear the term, you probably picture Terminator-style killer robots that can out-think a human at every turn. And you know what? You're absolutely correct (to some extent).

That science-fiction kind of AI, the free-thinking intelligence of a synthetic mind, is now referred to as AGI or ASI. Artificial General Intelligence (AGI) refers to machine intelligence that matches the cognitive abilities of the human mind, including reasoning, problem-solving, learning, and understanding complex concepts across a wide range of knowledge domains. However, because our scientific understanding of the human brain and its processes is still incomplete, achieving true AGI remains a significant challenge. This gap in knowledge suggests that it will likely take considerable time, and further advances in both neuroscience and AI research, before we can create and fully understand AGI systems at a human-equivalent level.

Artificial Superintelligence (ASI) takes it a step further. Still hypothetical, it is a form of artificial intelligence that surpasses human intelligence in every field, including reasoning, creativity, problem-solving, emotional understanding, and social skills. Unlike AGI, which would match human cognitive abilities across a range of tasks, ASI would outperform even the most talented human minds in virtually all areas: likely faster, more precise, and with ideas and concepts beyond our comprehension. Most experts consider the development of ASI to be contingent on first achieving true AGI. Once AGI is realised, the theory goes, it could rapidly improve itself, gaining general cognitive superiority and the capacity to address complex challenges far beyond current human ability.

The term AI as it is used right now is mostly a marketing term to hype up advanced Machine Learning (ML). Machine Learning has been with us for decades in the form of language translators, facial recognition in your photos app, camera autofocus, and Chess against the computer. Recent advances in ML optimisation and techniques have been re-branded as the AI we know today. ML is the digital world's version of what humans call "muscle memory": a skill picked up through repetition rather than explicit instructions. It is also the basis for all the AI tools we use on our devices, such as chatbots like ChatGPT and image generators like DALL-E, and underneath all of that ML/AI sits a branch of mathematics called linear algebra. Linear algebra is a foundation of machine learning, data science, computer graphics, and much more. But what exactly is it, and why is it so important?

Engine driving AI

Linear Algebra is king

What is Linear Algebra?

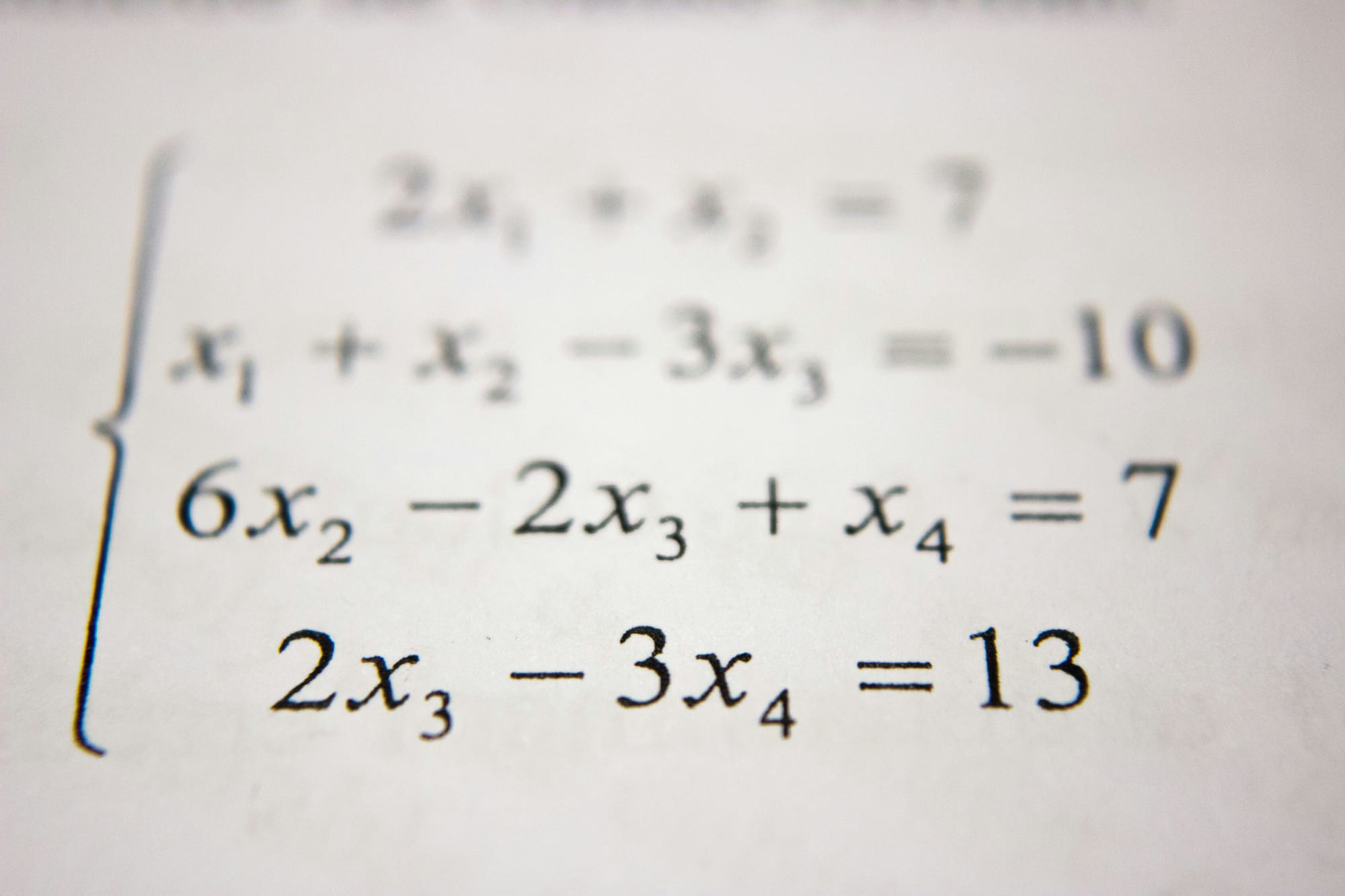

Linear algebra is the study of vectors, matrices, and linear transformations. It’s all about understanding how to represent and manipulate data; think of it as the maths that helps computers understand. Computers interpret the world as numbers, whether those numbers represent pixels in an image, features of a dataset, or coordinates in 3D space.

How linear algebra is used in ML

A vector is like an arrow in space; it has both direction and magnitude (length). In ML, each row of a dataset can be thought of as a vector. For example, a vector might represent a user’s age, income, and spending habits.

A matrix is a grid of numbers, like a spreadsheet. In image processing, an image is often represented as a matrix of pixels. Each number in the matrix corresponds to the brightness of a pixel (colour images use one matrix per colour channel: red, green, and blue).
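To make vectors and matrices a bit more concrete, here is a minimal sketch in plain Python. The user's numbers and the tiny 3x3 image are made up for illustration, and `magnitude` and `brighten` are hypothetical helper names, not part of any library.

```python
import math

# A hypothetical user as a vector: [age, yearly income, monthly spending].
user = [34, 52000.0, 1800.0]

def magnitude(vector):
    """Length of a vector: square root of the sum of squared components."""
    return math.sqrt(sum(x * x for x in vector))

# A made-up 3x3 greyscale image as a matrix: 0 is black, 255 is white.
image = [
    [0, 128, 255],
    [64, 128, 192],
    [255, 128, 0],
]

def brighten(pixels, amount):
    """Add `amount` to every pixel, capping the result at 255."""
    return [[min(255, p + amount) for p in row] for row in pixels]

print(magnitude([3, 4]))         # 5.0
print(brighten(image, 100)[0])   # [100, 228, 255]
```

Real ML code would normally hand this work to a numerical library, but nested lists are enough to show the idea: a vector is one row of numbers, a matrix is a grid of them.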

A linear transformation is a function that takes a vector and outputs another vector, and it can always be written as multiplication by a matrix. It can stretch, rotate, or reflect vectors. When you resize or rotate a photo on your phone, a linear transformation is applied to the coordinates of its pixels.
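Here is a small sketch of a rotation and a stretch written as matrix-times-vector multiplication. The helper names `mat_vec` and `rotation` are my own, chosen for this example.

```python
import math

def mat_vec(matrix, vector):
    """Multiply a matrix by a vector: one dot product per matrix row."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def rotation(degrees):
    """2x2 matrix that rotates a 2D vector anticlockwise by `degrees`."""
    t = math.radians(degrees)
    return [[math.cos(t), -math.sin(t)],
            [math.sin(t),  math.cos(t)]]

# A matrix that stretches everything to twice its size.
stretch = [[2, 0],
           [0, 2]]

rotated = mat_vec(rotation(90), [1, 0])   # point on the x-axis, turned 90 degrees
print([round(x, 6) for x in rotated])     # [0.0, 1.0]
print(mat_vec(stretch, [1, 3]))           # [2, 6]
```

The rounding is only there because `math.cos(math.radians(90))` comes out as a tiny number very close to zero rather than exactly zero.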

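The dot product of two vectors is a single number that measures how closely the two vectors point in the same direction: large and positive when they align, zero when they are perpendicular, and negative when they point in opposite directions. This is often used in recommendation systems (like Netflix or Amazon) to find similar items or users.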
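As a rough sketch of how a recommender might compare users, here are three invented people with invented ratings. Cosine similarity is the dot product divided by both vector lengths, so the result only reflects direction; real systems are far more elaborate than this.

```python
import math

def dot(a, b):
    """Multiply matching components and add the results up."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product scaled by both lengths: 1.0 means same direction."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Hypothetical ratings for [action, comedy, documentary].
alice = [5, 1, 0]
bob = [4, 2, 0]
carol = [0, 1, 5]

print(dot(alice, bob))     # 22
print(dot(alice, carol))   # 1
print(cosine_similarity(alice, bob) > cosine_similarity(alice, carol))  # True
```

Alice and Bob both love action films, so their vectors point roughly the same way and score high; Carol's taste points somewhere else entirely.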

An eigenvector is a vector whose direction doesn’t change when a transformation is applied; it merely gets scaled. The eigenvalue is that scaling factor. Google's original search-ranking algorithm, PageRank, scores web pages using an eigenvector of the matrix that describes which pages link to which.
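One simple way to find an eigenvector is power iteration: keep multiplying a starting vector by the matrix until it stops changing. The sketch below applies it to a made-up three-page web (A links to B and C, B links to C, C links to A). It is a simplified illustration in the spirit of PageRank, without the damping factor and other details of the real algorithm.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix by a vector: one dot product per matrix row."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# links[i][j] = chance of clicking from page j to page i (pages A, B, C).
links = [
    [0.0, 0.0, 1.0],   # A is reached from C
    [0.5, 0.0, 0.0],   # B is reached from A
    [0.5, 1.0, 0.0],   # C is reached from A and from B
]

def power_iteration(matrix, steps=100):
    """Repeatedly apply the matrix; the vector settles on an eigenvector."""
    n = len(matrix)
    vector = [1.0 / n] * n
    for _ in range(steps):
        vector = mat_vec(matrix, vector)
        total = sum(vector)
        vector = [x / total for x in vector]   # keep the scores summing to 1
    return vector

ranks = power_iteration(links)
print([round(r, 3) for r in ranks])   # [0.4, 0.2, 0.4]
```

Multiplying `links` by `ranks` gives `ranks` back again, which is exactly the eigenvector property with an eigenvalue of 1.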

How does this work for LLMs?

Large Language Models (LLMs) power the chatbots we use (e.g., ChatGPT, Gemini, DeepSeek, etc.). So how do they work?

- Words as Vectors: Every word is converted into a vector (a list of numbers) that captures its meaning, so that similar words end up with similar vectors. For example, “king” − “man” + “woman” ≈ “queen”.

- Sentences as Matrices: A sentence is turned into a matrix with one word vector per row, making it easy for the LLM to process a large volume of text at once.

- Attention Mechanism: LLMs compare the word vectors in that matrix with each other, using dot products, to decide which words matter most to each other in a sentence. This helps the model prioritise content based on context and the relationships between words.

- Neural Network Layers: LLMs stack many layers on top of each other, like a lasagna; each layer multiplies your input vectors by its own matrices and transforms them into more useful outputs, thereby improving the ability of the LLM to understand context and generate better responses.

- Training the Model: Developers like OpenAI adjust the numbers inside these matrices by letting the LLM learn from its mistakes mathematically: the model predicts the next word, the error is measured, and the numbers are nudged to shrink that error, improving predictions over time.

- Generating Answers: After the last layer, the final vector is turned into a probability for every possible next word. The model picks a word, adds it to the text, and repeats, returning its answer to you one word at a time.
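The first few steps above can be sketched with a toy example. Everything here is invented for illustration: the four-word vocabulary, the two-number "embeddings" (roughly [royalty, gender]), and the helper names. Real models learn vectors with hundreds or thousands of numbers, and their attention uses extra learned matrices that this sketch skips.

```python
import math

# Hand-made word vectors: [royalty, gender]. These values are assumptions.
embeddings = {
    "king":  [1.0,  1.0],
    "queen": [1.0, -1.0],
    "man":   [0.0,  1.0],
    "woman": [0.0, -1.0],
}

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def nearest_word(vector):
    """Vocabulary word whose vector is closest to `vector`."""
    def distance(word):
        return sum((x - y) ** 2 for x, y in zip(embeddings[word], vector))
    return min(embeddings, key=distance)

# Words as vectors: king - man + woman lands on queen.
target = [k - m + w for k, m, w in
          zip(embeddings["king"], embeddings["man"], embeddings["woman"])]
print(nearest_word(target))   # queen

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(sentence):
    """For each word: attention weights over the sentence, and the
    weighted mix of word vectors those weights produce."""
    matrix = [embeddings[word] for word in sentence]   # sentence as a matrix
    scale = math.sqrt(len(matrix[0]))                  # sqrt of vector size
    all_weights, outputs = [], []
    for query in matrix:
        weights = softmax([dot(query, key) / scale for key in matrix])
        mixed = [sum(w * row[i] for w, row in zip(weights, matrix))
                 for i in range(len(query))]
        all_weights.append(weights)
        outputs.append(mixed)
    return all_weights, outputs

sentence = ["king", "queen", "man"]
weights, outputs = self_attention(sentence)
for word, row in zip(sentence, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word "pays attention" to every word in the sentence, and each row sums to 1. Training and answer generation are left out here; they build on the same dot products and matrix multiplications.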

Please do subscribe to this blog. You'll get a notification when my next post is uploaded! In it, you will learn how to run LLMs locally on your own devices (untethered from the cloud) to take control of your AI!